Artificial intelligence has led to better marketing, better customer service, and arguably a better quality of life for consumers. But it’s also given cybercriminals a shiny new weapon to add to their arsenal: deepfakes.

Although deepfakes are a relatively new threat that hasn’t yet reached the banking industry, they could pose serious risks for community financial institutions and their account holders in the future. Without further ado, let’s take a deep dive into deepfakes.

What are deepfakes?

“Deepfake” is a slang term coined in 2017. It originally described crude pranks that ran rampant on the internet, mostly targeting celebrities and politicians, in which their faces and voices were swapped onto different bodies to show them doing or saying things they never did or said. The video and audio produced this way fall into a category called synthetic media.

In the last few years, AI and this type of synthetic media have been used within internet culture as an entertaining, harmless way of taking a stroll through the uncanny valley. You may have even used it yourself: do you have a face-swap app on your phone or use a platform like Snapchat? This is the same type of technology that’s behind deepfakes.

Companies have also used this technology to create CGI models for their campaigns. Some of these robo-models have even developed huge social media followings by posting about their “lives” (yeah, we’re creeped out too).

How could deepfakes affect the banking industry?

Think about it: if AI can be used to transpose someone’s face onto a different body, clone voices, and even create entirely synthetic, lifelike identities, what’s to stop a tech-sophisticated criminal from performing high-impact identity fraud, like opening fake checking and savings accounts or credit cards? Some in the financial industry view this as an inevitability.

Criminals can use deepfakes in a number of ways to commit identity fraud, including:

- Voice phishing (vishing),
- Fabricated private remarks,
- Synthetic social botnets,
- 3D facemasks, and
- Pre-recorded videos.

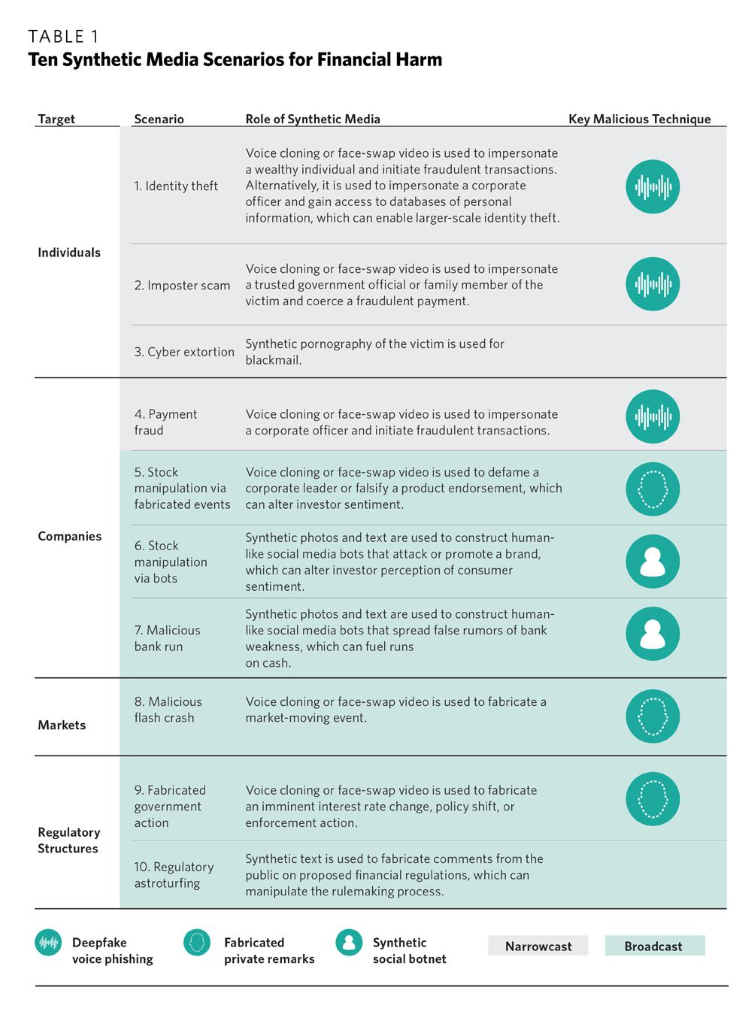

The Carnegie Endowment for International Peace recently conducted extensive research into the potential threats deepfakes pose against financial institutions, businesses, and society as a whole by looking at 10 possible scenarios. Here’s an overview of the threats they believe may be just around the corner:

Photo courtesy of the Carnegie Endowment.

Both your account holders and your institution could be at risk of falling victim to deepfakes. As you can see in the first scenario, vishing that clones the voice of an authority figure, such as a loan officer at your institution, could lead a consumer to give up sensitive personal information to a bad actor. Voice cloning and face-swap videos could also be used to initiate fraudulent payments.

In other scenarios, social media bots, like the CGI models we mentioned above, could use their platforms to do damage to a financial institution’s reputation with consumers or even stakeholders by spreading false information to their followers. These bots could also potentially open fraudulent accounts using stolen or falsified identities.

How can you protect your financial institution against deepfakes?

Right now, deepfakes are a new frontier for cybercriminals. It would take a sophisticated criminal with a lot to gain to go to the trouble of creating a convincing deepfake, so the chances of something like this infiltrating your bank or credit union are slim. However, as the technology becomes more ubiquitous and easier to use, the risk could increase. Implementing safeguards now is a smart way to prepare for any threats that lie ahead.

If you have digital, consumer-facing tools, like online account opening, be sure that they include built-in identity verification. 3D liveness detection is also a good way to combat deepfakes. It uses biometric authentication to determine whether there is a real person sitting in front of a computer or using a smartphone, making it far more difficult for a botnet or someone using a 3D mask to get past.

And remember that awareness can be a great defense against burgeoning threats like these. Make sure your team and account holders are aware of the possibility of deepfakes and offer your account holders regular security reminders.

For example, if your loan officers would never call a borrower to ask for their personal information, be sure all your borrowers know that. That way, they can be wary if someone calls claiming to be with your institution and asking for that type of information — even if that person has the voice of someone they’ve talked to before.

As with any security threat, vigilance is key. We’ll continue to keep an eye on deepfakes and alert you to any risks they may pose to your institution.